.NET String Theory

I love talking with real customers; no matter how many times you do it, you discover new uses for your product and new edges you never knew were there. We’d designed Gibraltar with certain sizing assumptions based on predecessor systems we’ve created for clients and on feedback from the beta period. One thing you can always count on is that no matter how much you optimize a system, users will push it until it breaks.

We were talking recently with a customer who was using Gibraltar to instrument a system that produced 150MB of data in a two-minute test run. Now, we use very aggressive compression, so it takes a lot to generate 150MB of data - millions of data points. What was interesting is that it caused a great deal of memory to be used, both in the Agent while collecting and in the Analyst when the session was opened. Digging through the details, we worked out that nearly all of the memory was being used for strings, most of which were in memory many times over because they came from different places.

Our data compression format already pushes string reuse when reading data, but reusing strings while collecting is more challenging (because they’re coming from outside of Gibraltar). Additionally, strings happen in places you don’t expect - like every time you display a value, you’re inevitably converting it into a string.

One approach to help with this is interning, which gives you a way to take a live string and swap it for a single shared instance. This leverages the fact that strings are immutable in .NET, so if two strings have the same content they’re interchangeable. Unfortunately, .NET takes interning to an extreme: once you intern a string, it stays in memory at least until the AppDomain is unloaded, and the documentation for String.Intern warns that it is unlikely to be released before the runtime itself shuts down. For our purposes that’s a nonstarter. It would be a huge memory leak and kill the Analyst (which in extreme cases needs to load 1GB of data, then drop it once the session is closed).
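To see what interning buys you (and why equal strings aren’t automatically the same object), here’s a small sketch using the built-in String.Intern. The class and method names (InternDemo, SameObject, Fresh, Run) are made up for this illustration and the sample text is arbitrary:

```csharp
using System;

public static class InternDemo
{
    // True only when both variables point at the very same string object.
    public static bool SameObject(string x, string y) => ReferenceEquals(x, y);

    // Builds a fresh string object at run time; the compiler cannot merge
    // these the way it merges identical literals.
    public static string Fresh(string text) => new string(text.ToCharArray());

    public static void Run()
    {
        string a = Fresh("Order submitted");
        string b = Fresh("Order submitted");

        Console.WriteLine(a == b);            // True: same characters
        Console.WriteLine(SameObject(a, b));  // False: two separate objects

        // String.Intern returns the pooled instance for this content.
        string pooledA = string.Intern(a);
        string pooledB = string.Intern(b);
        Console.WriteLine(SameObject(pooledA, pooledB)); // True: one shared object
    }
}
```

Call InternDemo.Run() from your own Main to try it. The catch is the one described above: nothing in this code can ever take "Order submitted" back out of the intern pool.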

Cutting Strings down to Size

Instead, we’ve spent a few days writing and testing a single-instance string store for both the Agent and Analyst. Initial results are very encouraging - we were able to reduce memory utilization in some extreme cases (like using a very large Live Viewer buffer on a running application) by 90% with only a 5% processor load penalty. Better yet, since the Agent is designed to be asynchronous, we’re doing all of this optimization work behind the curtain where it doesn’t affect application response time.
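To make the idea concrete, here’s a minimal sketch of what a single-instance store could look like. This is not the Gibraltar implementation; the names StringStore, GetOrAdd and Pack are hypothetical, and it assumes .NET 4.5 or later (for WeakReference<T>) and C# 7 syntax. The key difference from String.Intern is that the store only holds weak references, so a string can be garbage collected once nothing else is using it:

```csharp
using System;
using System.Collections.Generic;

// Illustrative sketch only: a store that hands back one shared instance
// per distinct string without keeping those strings alive itself.
public sealed class StringStore
{
    private readonly object _lock = new object();

    // Keyed by hash code rather than by the string, because a string key
    // would be a strong reference and nothing could ever be collected.
    private readonly Dictionary<int, List<WeakReference<string>>> _buckets =
        new Dictionary<int, List<WeakReference<string>>>();

    // Returns the shared instance equal to value, registering value itself
    // as the shared instance if no live equal string is in the store.
    public string GetOrAdd(string value)
    {
        if (string.IsNullOrEmpty(value)) return value;

        int hash = value.GetHashCode();
        lock (_lock)
        {
            if (!_buckets.TryGetValue(hash, out var bucket))
            {
                bucket = new List<WeakReference<string>>();
                _buckets[hash] = bucket;
            }

            // Walk backwards so dead entries can be removed as we go.
            for (int i = bucket.Count - 1; i >= 0; i--)
            {
                if (!bucket[i].TryGetTarget(out string existing))
                    bucket.RemoveAt(i); // that string has been collected
                else if (string.Equals(existing, value, StringComparison.Ordinal))
                    return existing;
            }

            bucket.Add(new WeakReference<string>(value));
            return value;
        }
    }

    // Drops entries whose strings have been collected; returns how many.
    public int Pack()
    {
        lock (_lock)
        {
            int removed = 0;
            var emptyKeys = new List<int>();
            foreach (var pair in _buckets)
            {
                removed += pair.Value.RemoveAll(w => !w.TryGetTarget(out _));
                if (pair.Value.Count == 0) emptyKeys.Add(pair.Key);
            }
            foreach (int key in emptyKeys) _buckets.Remove(key);
            return removed;
        }
    }
}
```

You’d use it by passing each incoming string through the store, as in `message = store.GetOrAdd(message);`, and letting the duplicate copy fall out of scope. A single lock keeps the sketch simple; in a design like the one described above, where the work happens on a background thread, that cost would land away from the application’s own threads.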

Many users won’t notice a difference - if you’re not using the Live Viewer or don’t log much, there isn’t much to optimize (and the overhead of the single-instance store is zero). If you have a lot of RAM, it won’t really matter because the OS will just throw memory at your app anyway. But when you want to go crazy and log a great deal of data and view it in real time, it’s great to have that safety buffer there.

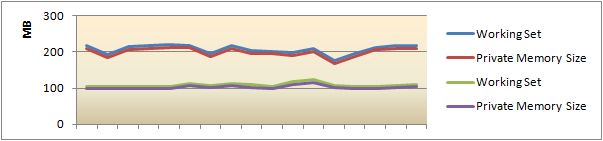

As a typical example, you can do a before-and-after comparison of our dynamic binding sample app: we reduced the working set of the application by 128MB under heavy logging. You never know when that extra memory will come in handy, and I love that we’re doing things that ensure you never need to worry about what our Agent is up to when running your app. Here’s a chart of the application running on a system with a great deal of memory (so there is no memory pressure) logging over 2.8 million messages:

Memory Usage for Sample Application (2.8 Million Messages)

The only difference between the two runs is the version of our Agent (the upper lines are Agent build 414, the lower lines are the latest internal build, 430). Keep in mind that the improvement is an absolute amount of memory, not a percentage of your application’s footprint. Since the sample app does nothing but log, the Agent has a disproportionate effect on its memory use.

The other thing you have to remember is that you really only have about 1.5GB of memory to work with as a 32-bit process, so doing what you can to both reduce memory fragmentation and keep your footprint down for the really extreme cases is great. This single-instance store trick is particularly useful because the more strings there are, the higher the probability that the next one is already in the store. This means the memory savings grow with the amount of memory used.

Give it a spin on your project

You can try this all for yourself - check out our article on CodeProject where you can download the complete source code so you can get these benefits for your own project!