Gibraltar 3.0 New Feature Dive - Super-Sized Sessions

A major challenge customers have had with Gibraltar 2.0 is working with long-running and large sessions. This stems from an early design decision to target smart client applications for the first releases of Gibraltar. From this decision we made a series of assumptions - how large sessions would be, how people would want to count and interpret them, when they would get sent in, etc. For example, in early beta versions we assumed by default that you only wanted to keep 50MB of total log data and that each session would be around 5MB. Looking back, this just isn't how things turned out at all.

Once you start using Gibraltar with server applications - like web sites or even Windows services - a few things happen:

- Long Life: Gibraltar equates a session's lifetime to the application domain it runs in. For Windows services this could be months, possibly even years. For web sites it's typically a day or less.

- Session Size: It's hard to imagine a single-user smart client recording more than 50MB of information. Our analysis of the log files we receive and that our customers use indicates this is a pretty fair assumption. A busy corporate line-of-business web application, on the other hand, can easily generate 500MB of log data each day.

- Submitting Sessions: By default, Gibraltar waits for a session to end before sending in the data. This works well with end-user applications but doesn't match up with operating your own web sites or services. What use is it to find out a month later that your Windows Service was having a problem? You could trigger sending data manually, even when an error happened, but if your session got too large this could be dangerous because we first merged all of the data together to send it.

The biggest problems people have using Gibraltar 2.x in these situations are:

- If you log a lot of data, you need a lot of memory and processor time to submit it so you can view it - about five times the size of the log data. To add insult to injury, every submission pushed all of the data to the server, where it was completely re-analyzed for issues and then copied down to each Analyst. Very inefficient.

- You had to add code to your application to explicitly push the session to the server to see any of the data. And when you did, you could run into the first problem.

No question about it, this sucks and it had to stop.

Editor’s Note: Not all of the optimizations discussed below have shipped in Gibraltar 3.0 Beta 2.

Smaller Data Files

Our original compression approach was based on a sampling of files between 5 and 50MB. It turns out that the files we really should have worried about were in the 50 to 500MB range. Saving 25% on a 5MB file isn't likely to impress anyone; cutting 100MB off a log file makes a real difference. We've substantially revised how we compress files and will get an average 65% reduction in size for typical files. That's right - they're now about a third as big. This is all done with no impact on the throughput of the log system because of the asynchronous, queued approach Gibraltar uses to record data.

We also reduced the memory the Agent needs to perform this compression. Previously, in extreme cases, it could use up to your maximum log file size in RAM while logging. Some customers ran into this when they dramatically increased their log file sizes to avoid the problem of merging fragments (which uses even more memory). Now the compression uses a fixed buffer size measured in KB to ensure it's both efficient and predictable.
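To make the fixed-buffer idea concrete, here is a minimal Python sketch of streaming compression with bounded memory. It uses the standard `zlib` module as a stand-in codec; the post doesn't describe Gibraltar's actual file format, and `compress_stream` and the 64KB `CHUNK_SIZE` are hypothetical choices for illustration. The point it shows is that working memory depends on the chunk size and the compressor's internal state, not on how much has been logged.

```python
import io
import zlib

# Hypothetical buffer size; the post only says "a fixed buffer size measured in KB".
CHUNK_SIZE = 64 * 1024


def compress_stream(src, dst, chunk_size=CHUNK_SIZE):
    """Compress everything readable from src into dst, one chunk at a time.

    Only one chunk of input is held at once, so working memory stays roughly
    constant no matter how large the log grows. Returns bytes consumed.
    """
    compressor = zlib.compressobj(6)  # zlib/DEFLATE as a stand-in codec
    consumed = 0
    while True:
        chunk = src.read(chunk_size)
        if not chunk:
            break
        consumed += len(chunk)
        dst.write(compressor.compress(chunk))
    dst.write(compressor.flush())  # emit buffered output and the stream trailer
    return consumed


# Usage: stream synthetic, log-like text through the compressor.
# (A real agent would read from and write to files, not in-memory buffers.)
sample = b"".join(
    b"2011-03-01 12:00:%02d INFO Request %d completed\n" % (i % 60, i)
    for i in range(100_000)
)
out = io.BytesIO()
consumed = compress_stream(io.BytesIO(sample), out)
```

Because `compressobj` keeps its own sliding window, the output is a single valid stream even though it was produced piecewise; `zlib.decompress(out.getvalue())` recovers the original bytes.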

These smaller files have another advantage: we previously zipped files before they were sent to the Hub to save network time. This got us about a 40% reduction in file size but cost time, processor, and disk space. With the new scheme there's no advantage to doing this - the files would actually get slightly larger. So we can skip this step, which saves time on both the client and the server.

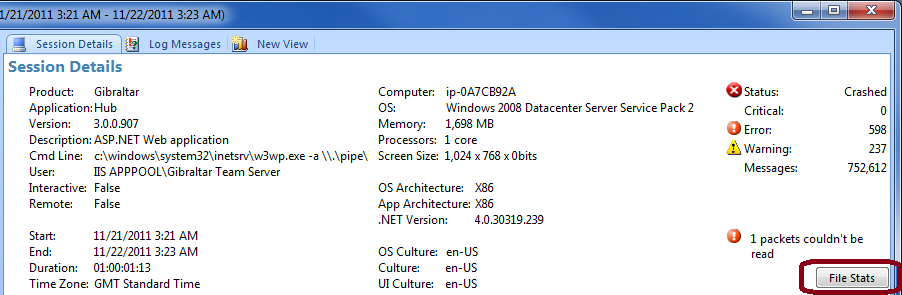

We’ve also added a feature to let you understand why your data files are as big as they are. In Analyst, you can open a session and then click File Stats on the Session Details tab.

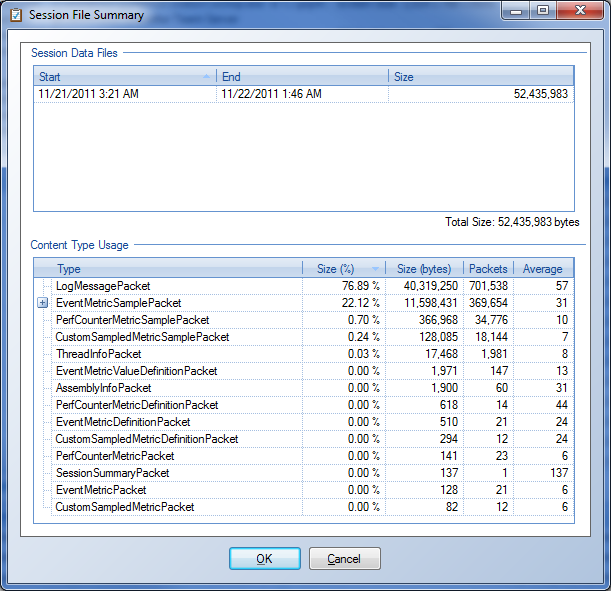

Here's the analysis for one of the Gibraltar Hubs we're using for testing. It logged about 700,000 messages in a 24-hour period.

You can see that the average size of each log message is 57 bytes. Now that's good stuff from a compression standpoint. The process also recorded a fair number of metrics and performance counters. There are metrics for each data operation, web service call, etc., and these add up to a total of about 350,000 individual samples at an average size of 31 bytes. All of the performance counter samples - 35,000 of them - came in at an average of 10 bytes each. All in, the file was just over 50MB.
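The figures above are easy to sanity-check: multiplying each count by its average size roughly reproduces the total. A quick Python sketch using only the numbers quoted from File Stats (decimal megabytes; the counts are approximate and any per-file overhead is ignored):

```python
# Counts and average sizes (bytes) as quoted from the File Stats analysis.
categories = {
    "log messages": (700_000, 57),
    "metric samples": (350_000, 31),
    "performance counter samples": (35_000, 10),
}


def estimate_bytes(stats):
    """Estimated bytes per category: count multiplied by average size."""
    return {name: count * avg for name, (count, avg) in stats.items()}


breakdown = estimate_bytes(categories)
total = sum(breakdown.values())

for name, size in breakdown.items():
    print(f"{name:28s} {size / 1_000_000:6.2f} MB ({size / total:.0%})")
print(f"{'total':28s} {total / 1_000_000:6.2f} MB")
```

The estimate comes to about 51.1MB, consistent with "just over 50MB": log messages make up roughly 78% of it, metric samples about 21%, and performance counters under 1%.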

From this information you can get a good feel for what’s driving your log file size. Now, you may never care - but when you get into logging millions of messages and millions of metrics then it’s good to have around.

Incremental Data Files

For Gibraltar 3.0 we've changed things so that the individual file fragments Gibraltar stores data in (each representing a period of time) are never merged together - all the way down to Analyst. Whenever data needs to be made available, Gibraltar has always rolled over the current log file (called a session fragment) and started a new one. Previously these fragments all had to be merged together before the data could be sent anywhere off the computer. Now they're each managed individually. Thanks to this change, each time your application sends data to the Hub, only the fragments that haven't already been sent are processed. Likewise, when this data needs to be analyzed for issues or sent down to clients that want to view the details, only the new information is handled.
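The "only send what's new" behavior amounts to remembering which fragments have already gone out. This Python sketch is purely illustrative: `Fragment`, `FragmentSender`, and the `upload` callback are hypothetical names, not Gibraltar's API, and a real agent would persist the sent set rather than keep it in memory.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Set


@dataclass(frozen=True)
class Fragment:
    """One rolled-over session fragment (hypothetical model, not Gibraltar's API)."""
    fragment_id: int
    size_bytes: int


@dataclass
class FragmentSender:
    """Remembers which fragments already reached the Hub (hypothetical)."""
    sent_ids: Set[int] = field(default_factory=set)

    def pending(self, fragments: List[Fragment]) -> List[Fragment]:
        # Only fragments that haven't been sent yet need any work.
        return [f for f in fragments if f.fragment_id not in self.sent_ids]

    def send_new(self, fragments: List[Fragment],
                 upload: Callable[[Fragment], None]) -> List[Fragment]:
        new = self.pending(fragments)
        for frag in new:
            upload(frag)  # each fragment travels on its own; nothing is merged
            self.sent_ids.add(frag.fragment_id)
        return new


# Usage: three fragments exist at the first send; a fourth rolls over later.
uploaded: List[Fragment] = []
sender = FragmentSender()
session = [Fragment(1, 50_000_000), Fragment(2, 50_000_000), Fragment(3, 12_000_000)]
first_send = sender.send_new(session, uploaded.append)
session.append(Fragment(4, 8_000_000))
second_send = sender.send_new(session, uploaded.append)
```

The second send touches only the 8MB fragment rather than the 120MB session, which is the property that keeps repeated sends cheap.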

We've also gone through the processes that send data - whether to the Hub server, email, or files - to eliminate the unnecessary file copies they used to make (these get expensive when you have a 300MB file) and to minimize the memory required. The bottom line is that you can safely send session data at any time, regardless of how much data you've logged or how long your application's been running.

Let Your Application Run Forever

Making the session files smaller and keeping them in fragments helps with large sessions, but when you have a really long-running application a few other things come into play. First, you want to make sure you can discard older log data from the computer (to keep down local disk usage) even while the application is still running. In many cases, Gibraltar 2.x would end up throwing away the start of the session and all you'd have is the last 150MB of data (if you used the default limits). For 3.0, session fragments can be sent automatically as soon as the log file rolls over for any reason. The default limits of 50MB and one day mean that for each Windows Service you'd get at least one fragment every day - which would be sent up to the server and then pruned locally. You can still trigger sending data more often from within the application like you used to, and it'll work great.
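The rollover rule described above (whichever of the size or age limit is hit first) can be sketched in a few lines of Python. Everything here is an assumption for illustration: the function names are hypothetical, the post doesn't say whether "50MB" means 50 × 1024 × 1024 or 50,000,000 bytes, and the simulation only checks the limits once per hour.

```python
from datetime import datetime, timedelta

# Default limits quoted in the post. Interpreting "50MB" as 50 * 1024 * 1024
# bytes is an assumption, as are all the names below.
MAX_FRAGMENT_BYTES = 50 * 1024 * 1024
MAX_FRAGMENT_AGE = timedelta(days=1)


def should_roll_over(current_bytes: int, opened_at: datetime, now: datetime,
                     max_bytes: int = MAX_FRAGMENT_BYTES,
                     max_age: timedelta = MAX_FRAGMENT_AGE) -> bool:
    """Roll over when either the size limit or the age limit is reached."""
    return current_bytes >= max_bytes or now - opened_at >= max_age


def count_rollovers(days: int, bytes_per_hour: int,
                    start: datetime = datetime(2011, 1, 1)) -> int:
    """Simulate a service logging at a steady rate, checking once per hour.

    Each rollover is the point where a fragment could be sent to the Hub and
    then pruned locally. Hourly checks are a simplification, so a fragment
    may overshoot the size limit slightly.
    """
    rolled = 0
    opened_at, size, now = start, 0, start
    for _ in range(days * 24):
        now += timedelta(hours=1)
        size += bytes_per_hour
        if should_roll_over(size, opened_at, now):
            rolled += 1
            opened_at, size = now, 0
    return rolled


# A quiet service (~4.8MB/day) rolls over once a day on age alone;
# a busy one (~480MiB/day) rolls over on size every few hours.
quiet_week = count_rollovers(days=7, bytes_per_hour=200_000)
busy_day = count_rollovers(days=1, bytes_per_hour=20 * 1024 * 1024)
```

Under these assumptions the quiet service produces 7 fragments in a week and the busy one produces 8 in a day, so even a service that barely logs still ships its data at least daily.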

The Hub server works better with this as well - it will analyze each fragment without having to load up information it has previously worked through. This means you get steady updates and the memory required on the server is dramatically reduced. We've had customers with Gibraltar 2.x whose servers were perpetually behind on analyzing sessions because, by the time they finished analyzing a large file, they had received another copy of it with just a modest amount of new information. Now this is a non-issue because the server can load just the new data and check it.
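On the server side, the same idea means carrying analysis results forward per session and folding in one fragment at a time. In this Python sketch, "analysis" is reduced to counting messages by severity, and `SessionState` and `IncrementalAnalyzer` are hypothetical names; the post doesn't describe how the Hub actually detects issues.

```python
from collections import Counter
from dataclasses import dataclass, field
from typing import Dict, List, Set, Tuple


@dataclass
class SessionState:
    """Running results carried between fragments (hypothetical)."""
    fragments_seen: Set[int] = field(default_factory=set)
    severity_counts: Counter = field(default_factory=Counter)
    messages_processed: int = 0


class IncrementalAnalyzer:
    """Analyzes each fragment once, updating per-session state (hypothetical)."""

    def __init__(self) -> None:
        self.sessions: Dict[str, SessionState] = {}

    def analyze_fragment(self, session_id: str, fragment_id: int,
                         messages: List[Tuple[str, str]]) -> SessionState:
        state = self.sessions.setdefault(session_id, SessionState())
        if fragment_id in state.fragments_seen:
            return state  # already analyzed: no reload, no rework
        for severity, _text in messages:
            state.severity_counts[severity] += 1
        state.messages_processed += len(messages)
        state.fragments_seen.add(fragment_id)
        return state


analyzer = IncrementalAnalyzer()
analyzer.analyze_fragment("svc-1", 1, [("Information", "started"), ("Error", "db timeout")])
analyzer.analyze_fragment("svc-1", 2, [("Warning", "slow request")])
# A repeated upload of fragment 2 is recognized and skipped.
state = analyzer.analyze_fragment("svc-1", 2, [("Warning", "slow request")])
```

The work per upload is proportional to the new fragment, not the whole session, which is why the server no longer falls behind on long-running sessions.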

Of Course, You'd Like to See All of That

The final problem area for Gibraltar with very large sessions was viewing them in Analyst. Historically you could load a session with between 1.2 and 2 million messages; any more than that and it would fail with an out of memory exception. This was because Gibraltar Analyst was a 32-bit-only application. We've rewritten our graphing system to use DevExpress XtraCharts, as we already do for Charting, and that means Analyst is good to go for 64-bit. If you have 8GB of RAM, you can use all of it for session data if you want. In practice, this means you're still limited to a session with perhaps 10-15 million messages. We're going to work on that in a future release to really achieve our goal of infinite logging.

This is all fine and good, but it's still missing something: the ability to handle the server-on-fire-right-now scenario we've all experienced at some point in our careers. For the story on that, stay tuned for our next deep dive!